Abstract

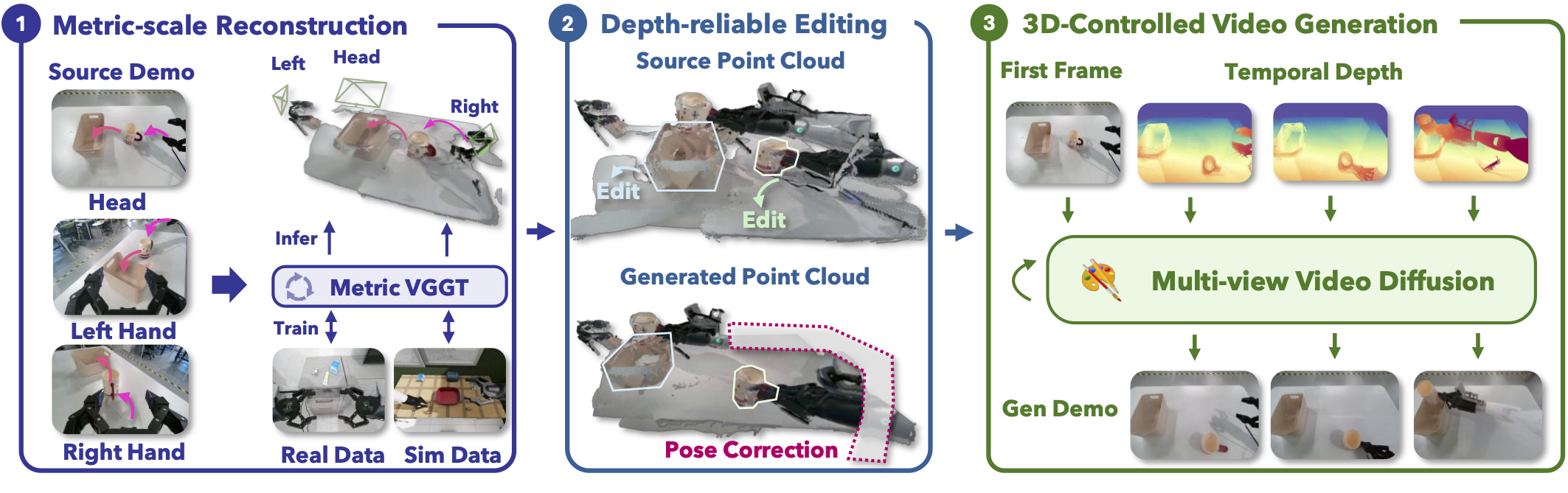

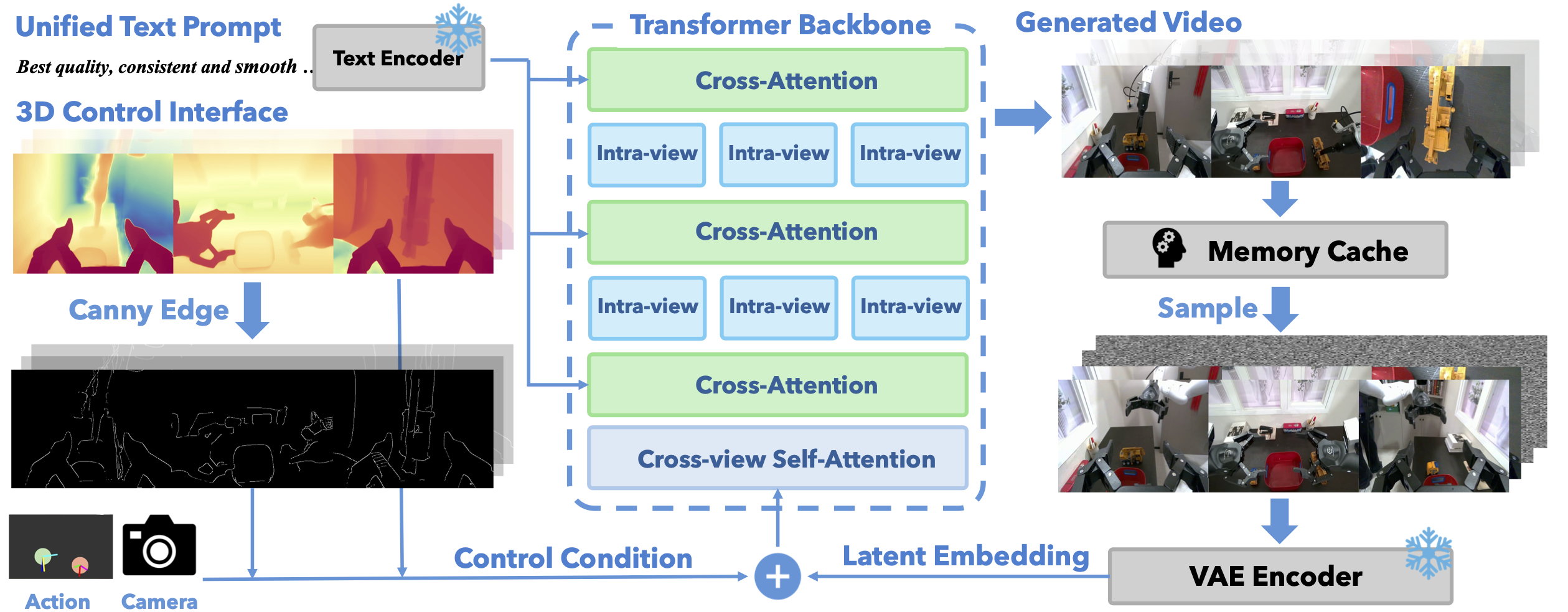

Recent progress in robot learning has been driven by large-scale datasets and powerful visuomotor policy architectures, yet policy robustness remains limited by the substantial cost of collecting diverse demonstrations, particularly for spatial generalization in manipulation tasks. To reduce repetitive data collection, we present Real2Edit2Real, a framework that generates new demonstrations by bridging 3D editability with 2D visual data through a 3D control interface. Our approach first reconstructs scene geometry from multi-view RGB observations with a metric-scale 3D reconstruction model. Based on the reconstructed geometry, we perform depth-reliable 3D editing on point clouds to generate new manipulation trajectories while geometrically correcting the robot poses to recover physically consistent depth, which serves as a reliable condition for synthesizing new demonstrations. Finally, we propose a multi-conditional video generation model guided by depth as the primary control signal, together with action, edge, and ray maps, to synthesize spatially augmented multi-view manipulation videos. Experiments on four real-world manipulation tasks demonstrate that policies trained on data generated from only 1-5 source demonstrations can match or outperform those trained on 50 real-world demonstrations, improving data efficiency by 10-50×. Moreover, experimental results on height and texture editing demonstrate the framework's flexibility and extensibility, indicating its potential to serve as a unified data generation framework.

Method

Figure 1. The framework of Real2Edit2Real. (1) Reconstruct metric-scale geometry from multi-view observations. (2) Synthesize novel trajectories with reliable depth rendering. (3) Generate demonstrations controlled by temporal depth signals.

Figure 2. The framework of 3D-Controlled Video Generation. We utilize depth as the 3D control interface, in conjunction with edges, actions, and ray maps, to guide the generation of multi-view demonstrations.
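To make step (2) of Figure 1 more concrete, the following is a minimal sketch of the general idea of editing a point cloud and re-rendering depth from it. It is not the Real2Edit2Real implementation: the function names `transform_points` and `render_depth`, the pinhole intrinsics `K`, and the simple z-buffer projection are all assumptions chosen for illustration, and the robot pose correction described in the abstract is not modeled here.

```python
import numpy as np

def transform_points(points, rotation, translation):
    """Apply a rigid transform to an (N, 3) point cloud (hypothetical helper)."""
    return points @ rotation.T + translation

def render_depth(points_cam, K, height, width):
    """Project camera-frame points to a depth map with a z-buffer.

    Pixels that receive no point are set to 0. This is an illustrative
    pinhole projection, not the renderer used by the authors.
    """
    depth = np.full((height, width), np.inf)
    z = points_cam[:, 2]
    valid = z > 1e-6  # keep only points in front of the camera
    pts, z = points_cam[valid], z[valid]
    u = np.round(K[0, 0] * pts[:, 0] / z + K[0, 2]).astype(int)
    v = np.round(K[1, 1] * pts[:, 1] / z + K[1, 2]).astype(int)
    inside = (u >= 0) & (u < width) & (v >= 0) & (v < height)
    # Keep the nearest depth when several points land on the same pixel.
    np.minimum.at(depth, (v[inside], u[inside]), z[inside])
    depth[np.isinf(depth)] = 0.0
    return depth

# Assumed toy setup: one object point 1 m in front of a 64x64 camera.
K = np.array([[100.0, 0.0, 32.0],
              [0.0, 100.0, 32.0],
              [0.0, 0.0, 1.0]])
object_points = np.array([[0.0, 0.0, 1.0]])

depth_before = render_depth(object_points, K, 64, 64)

# "Edit" the scene: shift the object 10 cm along the camera x-axis.
edited_points = transform_points(object_points, np.eye(3), np.array([0.1, 0.0, 0.0]))
depth_after = render_depth(edited_points, K, 64, 64)
```

In this toy setup the single point moves from pixel (row 32, column 32) to (row 32, column 42) while keeping a depth of 1 m, which illustrates how an edit in 3D can yield a new, geometrically consistent depth map that could then condition a video generator.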

Generated Demonstrations

The videos above are played at 2× speed.

Policy From Generated Data

The videos above are played at 5× speed.

BibTeX

@article{zhao2025real2edit2real,
  title={Real2Edit2Real: Generating Robotic Demonstrations via a 3D Control Interface},
  author={Yujie Zhao and Hongwei Fan and Di Chen and Shengcong Chen and Liliang Chen and Xiaoqi Li and Guanghui Ren and Hao Dong},
  year={2025},
  eprint={2512.19402},
  archivePrefix={arXiv},
  primaryClass={cs.RO},
  url={https://arxiv.org/abs/2512.19402},
}

Join Us

We are hiring innovative undergraduate and graduate students to work on robotics and world models. If you are interested, please email hwfan25[at]stu.pku.edu.cn or hao.dong[at]pku.edu.cn.